Designing Control Without Killing Authenticity

My Role

research, synthesis, narrative design, prototype iteration

collaborated with Bjarki & Steph

Tools

Figma • FigJam • Figma Make • Google Antigravity • Notion + Google Docs • Zoom • Respondent • Prolific • Miro

Timeframe

Two sprint cycles (1 month)

Scale Social AI turns guest photos and videos into short-form content for multi-location brands. Diners upload, staff reinforce participation, AI generates Reels, and an admin portal manages approvals. In practice, results are uneven. Some partners see a fivefold return on investment. Others barely ship one Reel a month because uploads, approvals, or both keep stalling.

Our sprint asked a simple question:

Who are Scale Social’s ideal partners,

and what do they need to succeed with AI-assisted UGC?

My role across sprints was to help shape the story, synthesize what we heard from users and internal emails, and turn a fuzzy idea of "brand control" into a clearer set of control models that could actually live in the product. I also owned an iteration of the clickable prototype that simplified those controls.

From previous rounds, our team already knew a few things:

1. Social content matters, but it competes with many other priorities.

2. Upload volume and content mix are inconsistent across locations.

3. Approvals are complex and often slow.

4. Scale Social is moving from individual locations toward parent-brand and franchise models.

So the question was not "Does Scale Social work?" It was "For whom, and under what kind of control?"

We framed this sprint around three research questions.

I helped turn these into interview prompts and prototype flows, and later into the narrative that anchored our presentation.

We interviewed three high-leverage roles: a healthcare-hospitality marketing director, a 1,600-location F&B franchise lead, and an automotive compliance consultant.

These are not store managers. They design and enforce the rules that stores live under. I emphasized that distinction in the story, because it means our insights apply across very large networks, not just one restaurant at a time.

Across industries, three themes kept appearing.

In one of our clips, a participant says plainly, "I like authenticity," a moment we captured on a slide with our prototype in the background.

For them, authenticity means:

- content that feels like real people in real locations

- posts that match the brand’s tone

- nothing that looks like generic AI filler

Some teams wanted lots of oversight.

Some wanted to move faster with guidance.

None were ready for fully blind automation.

Everyone had stories about slow reviews, feedback scattered across email and chat, and last-minute legal concerns. The problem was not only "Do we have content?" but "Can we say yes in time for it to matter?"

My job in synthesis was to link these three ideas. If authenticity is sacred and approvals are painful, then the product’s control model is the bridge between them.

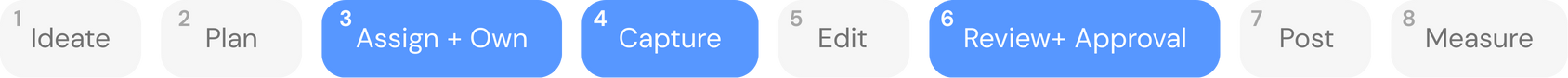

We mapped an end-to-end workflow, from ideation to measurement, then highlighted the trouble spots: Assign and own, Capture, and Review and approval.

- At "Assign and own", requests bypass formal systems and land in side channels.

- At "Capture", inputs depend on external contributors whose priorities and skills vary.

- At "Review and approval", multi-layer sign-off slows everything down and makes status hard to track.

Next, we tested how teams wanted to set guardrails.

We created a brand guidelines and guardrails prototype that let participants define settings such as:

- tone & voice

- caption rules

- CTAs

- guardrails

- words/phrases to avoid

- content themes

- emoji & hashtag rules

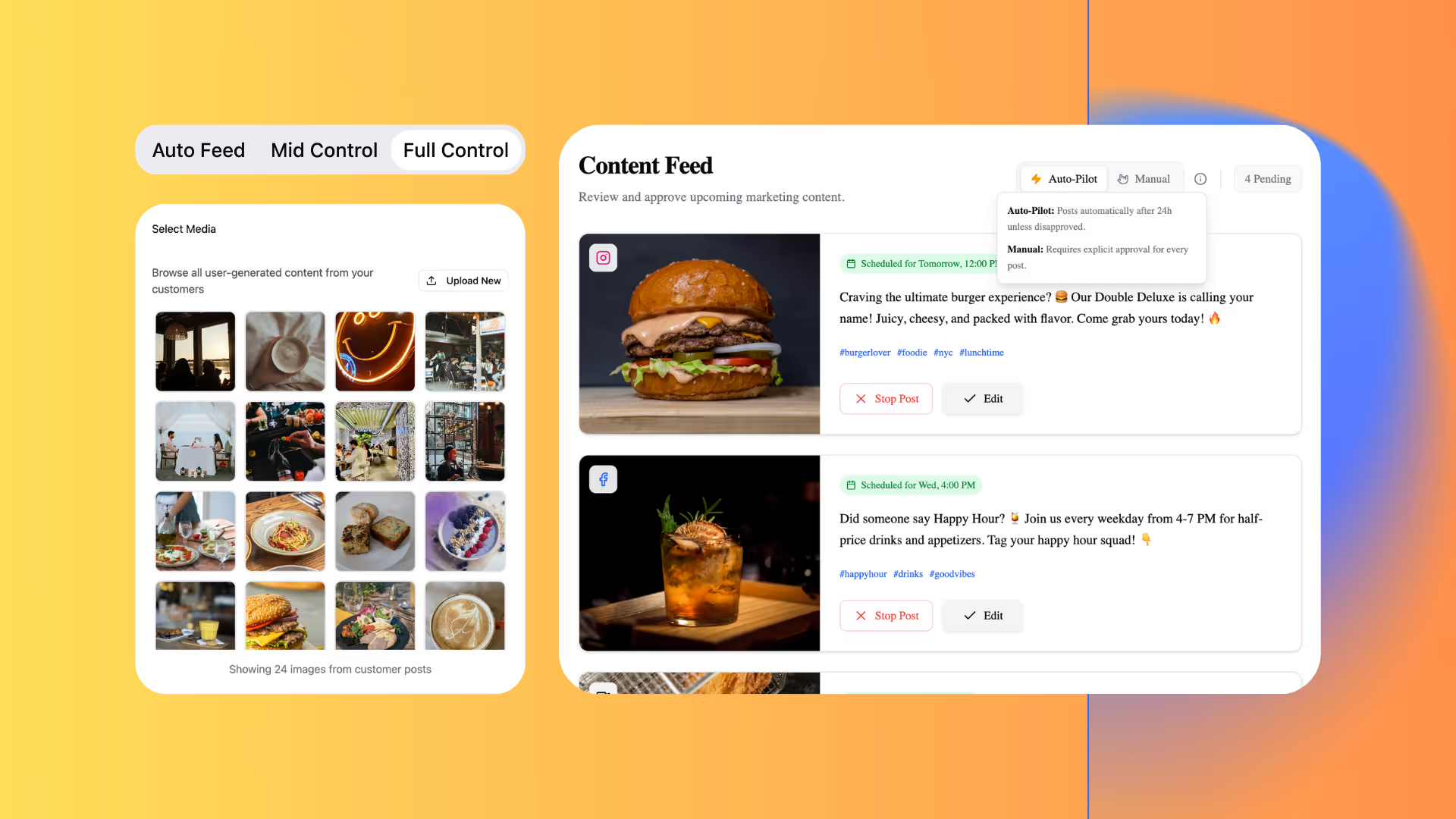

We also showed three content approval models:

- fully automated

- guided

- full human review

When I brought the prototype into our sessions with the three interviewees, the response was encouraging. Each of them reacted positively to the clarity of the control models and to the way the prototype framed authenticity. More importantly, they gave specific, actionable suggestions: their feedback helped refine the routing logic, simplify the language around approvals, and add transparency to the guardrail settings. Those conversations directly shaped the version of the prototype I developed afterward.

From these sessions, we learned that not all settings are equal.

We grouped them into:

- Core must-haves, such as tone and voice, caption expectations, key topics, and banned words.

- Recommended nice-to-haves, such as emoji and hashtag logic.

- Advanced controls for high-risk teams, such as music, audio, and extra guardrails for sensitive categories.

Participants wanted presets but insisted on the ability to customize quickly, especially when managing multiple concepts under one parent brand. That reinforced the idea that control should start from shared rules but stay flexible per concept.

The first clickable prototype I brought into interviews had three control modes. On paper, they covered the spectrum from low to high control. In practice, users did not think in three neat buckets.

During the sessions, all three participants reacted positively to the idea of control settings. They liked seeing options, especially around music and creative decisions. At the same time, their comments exposed a pattern:

- The "mid" mode was hard to reason about.

- The full automation mode felt like something they might reach one day, but not as a starting point.

- The full control mode was where they felt safest right now.

One quote captured it nicely:

"Maybe one day we could get to the mid control or auto feed, but I do not think we are there yet as a brand. I think we would definitely want to be involved."

That line became a turning point for my iteration.

In my next iteration I simplified the model from three modes to two clear control levels that match how these leaders actually decide:

Manual: A Guided level where the system proposes content and humans approve or lightly edit it.

Auto-Pilot: A Streamlined level for brands that are ready to move faster once they trust the AI.

Several interviewees talked about needing to push approved content into other tools. I added hooks in the prototype for sending posts to external platforms and schedulers, so the control model carried through to execution instead of ending at “approved.”

Interviewees wanted a fast way to say “close, but not quite.” I added a regenerate action directly in the review view, so they could quickly cycle through new options without leaving the flow or rewriting the brief.

In the first version, the brand guideline form felt long and flat. I regrouped it into clearer sections (voice and tone, do / don’t language, visual and caption rules) and tightened the hierarchy. In interviews, this layout tested much better: participants called the guidelines “actually usable” instead of “another giant form.”

We framed it this way:

- There is a trust gap. Blind automation feels risky for brand safety.

- Full and mid-level controls let us demonstrate AI quality without putting brand safety at risk. Every accepted suggestion builds trust.

- As teams see content that matches their voice, they naturally consider moving toward more automation.

- Automation can eventually become a premium add-on for mature, high-confidence customers.

My contribution was to connect the dots between user quotes, control designs, and this adoption story, so the product conversation moved from "should we automate" to "when and for whom should we automate".

A few personal takeaways from this sprint:

Takeaway 1

Authenticity needs structure. You cannot promise "real" content if the system does not protect it.

Takeaway 2

Control feels safe when routing is clear, not when there are more steps.

Takeaway 3

Cross-industry interviews can reveal shared patterns faster than staying within one vertical.

Takeaway 4

Narrative work is not just pretty slides. A coherent story helps stakeholders reason about abstract concepts like trust, control, and readiness for automation.

Businesses that get control right in their UGC marketing are not mystified by authenticity. They cultivate it.

This sentence captures what this sprint was about: designing systems that let AI help, without losing the human parts that make content worth watching in the first place.